Oterm

by ggozad

Interact with Ollama models through an intuitive terminal UI, supporting persistent chats, system prompts, model parameters, and MCP tools integration.

Oterm Overview

What is Oterm about?

Oterm provides a lightweight, cross‑platform terminal interface for Ollama, enabling users to chat with local LLMs, customise system prompts, adjust model parameters, and leverage MCP tools without running additional servers or front‑ends.

How to use Oterm?

1. Install – the quickest method is:

uvx oterm

(Alternatively, install via Homebrew, pip, or from source.)

2. Run – simply type oterm in any supported terminal emulator (Linux, macOS, Windows).

3. Navigate – use the on‑screen menus to select a model, create or switch chat sessions, adjust settings, and invoke tools.

4. Persist – chats are stored in a SQLite database, preserving context and customisations across launches.
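
The persistence step can be pictured with a small sketch. The schema below is hypothetical and only illustrates the idea of storing a chat together with its system prompt and parameters in SQLite; the table and column names (`chat`, `message`, `system_prompt`, `parameters`) and the model name are assumptions, not Oterm's actual layout.

```python
import json
import sqlite3

# Hypothetical schema for illustration only; Oterm's real tables may differ.
SCHEMA = """
CREATE TABLE IF NOT EXISTS chat (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    name TEXT NOT NULL,
    model TEXT NOT NULL,
    system_prompt TEXT,
    parameters TEXT NOT NULL DEFAULT '{}'
);
CREATE TABLE IF NOT EXISTS message (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    chat_id INTEGER NOT NULL REFERENCES chat(id),
    role TEXT NOT NULL,
    content TEXT NOT NULL
);
"""


def open_store(path=":memory:"):
    """Open (or create) the database and make sure the tables exist."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def create_chat(conn, name, model, system_prompt=None, parameters=None):
    """Insert a chat row; parameters are serialised as a JSON string."""
    cur = conn.execute(
        "INSERT INTO chat (name, model, system_prompt, parameters) "
        "VALUES (?, ?, ?, ?)",
        (name, model, system_prompt, json.dumps(parameters or {})),
    )
    conn.commit()
    return cur.lastrowid


def add_message(conn, chat_id, role, content):
    """Append one message to a chat."""
    conn.execute(
        "INSERT INTO message (chat_id, role, content) VALUES (?, ?, ?)",
        (chat_id, role, content),
    )
    conn.commit()


def load_history(conn, chat_id):
    """Return the chat's messages in insertion order."""
    rows = conn.execute(
        "SELECT role, content FROM message WHERE chat_id = ? ORDER BY id",
        (chat_id,),
    ).fetchall()
    return [{"role": role, "content": content} for role, content in rows]
```

Passing a file path instead of `":memory:"` to `open_store` would keep the history across launches, which is the behaviour the step above describes.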

Key Features

- Intuitive TUI with multiple persistent chat sessions.

- Model selection and per‑model system‑prompt/parameter customization.

- Full MCP tools and prompts support, including streaming and "thinking" mode.

- Image inclusion and theme selection for a personalized experience.

- RAG example integration and Homebrew distribution.
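
To make the system-prompt and parameter customisation above more concrete, here is a minimal sketch of how such settings could be folded into a request body for Ollama's `/api/chat` endpoint. How Oterm builds its requests internally is not described here, so the helper name `build_chat_request`, the model name, and the chosen option values are illustrative assumptions.

```python
import json


def build_chat_request(model, user_message, system_prompt=None,
                       options=None, history=None):
    """Assemble a JSON-serialisable body for Ollama's /api/chat endpoint."""
    messages = []
    if system_prompt:
        # The system prompt travels as the first message of the conversation.
        messages.append({"role": "system", "content": system_prompt})
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_message})

    payload = {"model": model, "messages": messages, "stream": False}
    if options:
        # Model parameters such as temperature go under "options".
        payload["options"] = dict(options)
    return payload


if __name__ == "__main__":
    body = build_chat_request(
        "llama3",  # assumed model name; use one you have pulled in Ollama
        "Summarise this repository in one sentence.",
        system_prompt="You are a concise assistant.",
        options={"temperature": 0.2},
    )
    print(json.dumps(body, indent=2))
```

The resulting body could be sent with any HTTP client to a running Ollama instance (by default at `http://localhost:11434/api/chat`); the sketch stops short of the network call so it runs without a server.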

Use Cases

- Rapid prototyping and experimentation with locally hosted LLMs.

- Knowledge‑base querying via RAG without leaving the terminal.

- Development workflows that need AI assistance directly in the command line.

- Debugging or testing custom Ollama models and prompts.

- Automating tool‑driven tasks (e.g., git operations) through MCP integrations.

FAQ

Q: Which operating systems are supported? A: Linux, macOS, and Windows with most terminal emulators.

Q: Do I need an internet connection? A: Not for the client itself, which works offline; a connection is needed only when the selected Ollama model or a tool requires remote resources.

Q: How are chats persisted? A: Chats, system prompts, and parameter settings are saved in a local SQLite database.

Q: Can I customise the appearance? A: Yes, Oterm supports multiple themes and UI styling options.

Q: How do I add custom tools? A: Use the MCP tools interface; Oterm can communicate with any MCP‑compatible server, including those you write yourself.
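
As a rough illustration of what "MCP‑compatible" involves, the sketch below handles the two JSON-RPC 2.0 methods MCP uses for tools, `tools/list` and `tools/call`. It is a simplified, standard-library-only picture: it omits the initialization handshake and any transport, and the `word_count` tool and `handle_request` function are hypothetical. A real server would normally be built with an MCP SDK.

```python
import json

# Hypothetical tool registry: name -> (description, argument schema, handler).
TOOLS = {
    "word_count": (
        "Count the words in a piece of text.",
        {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        lambda args: str(len(args["text"].split())),
    ),
}


def _error(request_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id,
         "error": {"code": code, "message": message}}
    )


def handle_request(raw):
    """Answer a single JSON-RPC request string with a response string."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params") or {}

    if method == "tools/list":
        # Advertise each tool with its name, description and input schema.
        result = {
            "tools": [
                {"name": name, "description": desc, "inputSchema": schema}
                for name, (desc, schema, _handler) in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, "Unknown tool")
        text = tool[2](params.get("arguments") or {})
        # Tool output is returned as a list of content items.
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(request_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})
```

A client would first call `tools/list` to discover `word_count`, then send `tools/call` with `{"name": "word_count", "arguments": {"text": "..."}}` whenever the model decides to use it.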

Oterm's README

oterm

the terminal client for Ollama.

Features

- intuitive and simple terminal UI, no need to run servers or frontends, just type oterm in your terminal.

- supports Linux, macOS, and Windows and most terminal emulators.

- multiple persistent chat sessions, stored together with system prompt & parameter customizations in sqlite.

- support for Model Context Protocol (MCP) tools & prompts integration.

- can use any of the models you have pulled in Ollama, or your own custom models.

- allows for easy customization of the model's system prompt and parameters.

- supports tools integration for providing external information to the model.

Quick install

uvx oterm

See Installation for more details.

Documentation

What's new

- Example on how to do RAG with haiku.rag.

- oterm is now part of Homebrew!

- Support for "thinking" mode for models that support it.

- Support for streaming with tools!

- Messages UI styling improvements.

- MCP Sampling is here in addition to MCP tools & prompts! Also support for Streamable HTTP & WebSocket transports for MCP servers.

Screenshots

The splash screen animation that greets users when they start oterm.

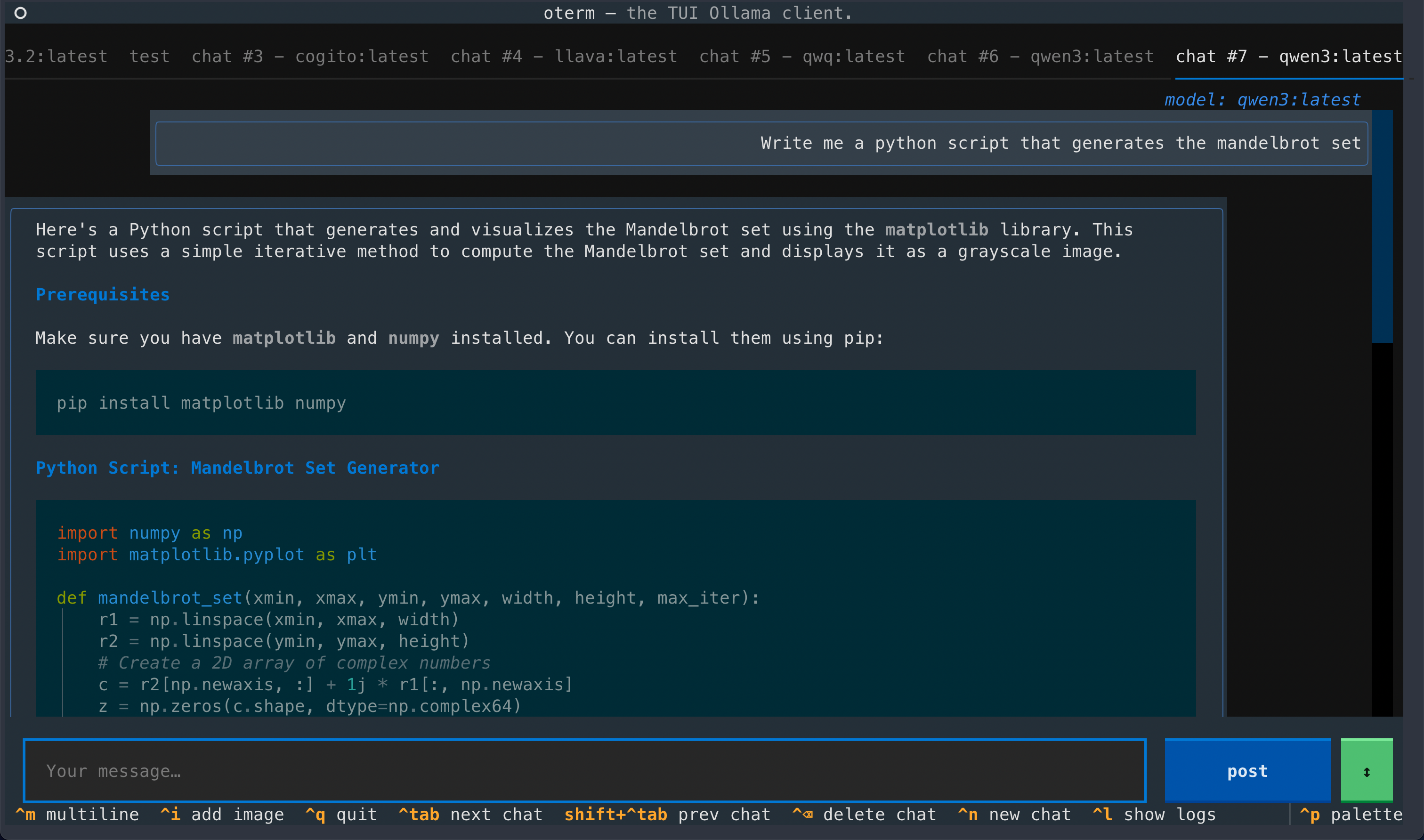

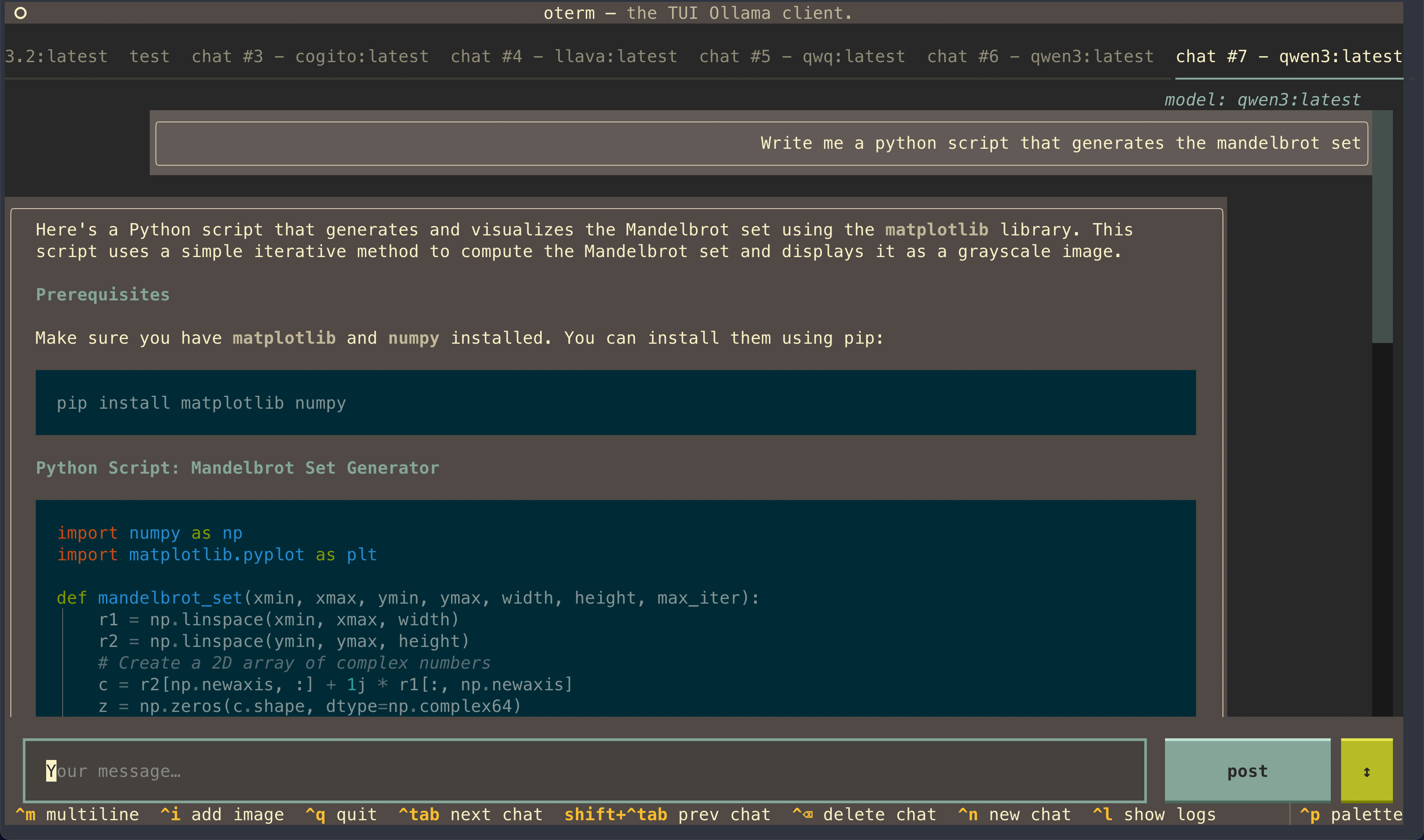

A view of the chat interface, showcasing the conversation between the user and the model.

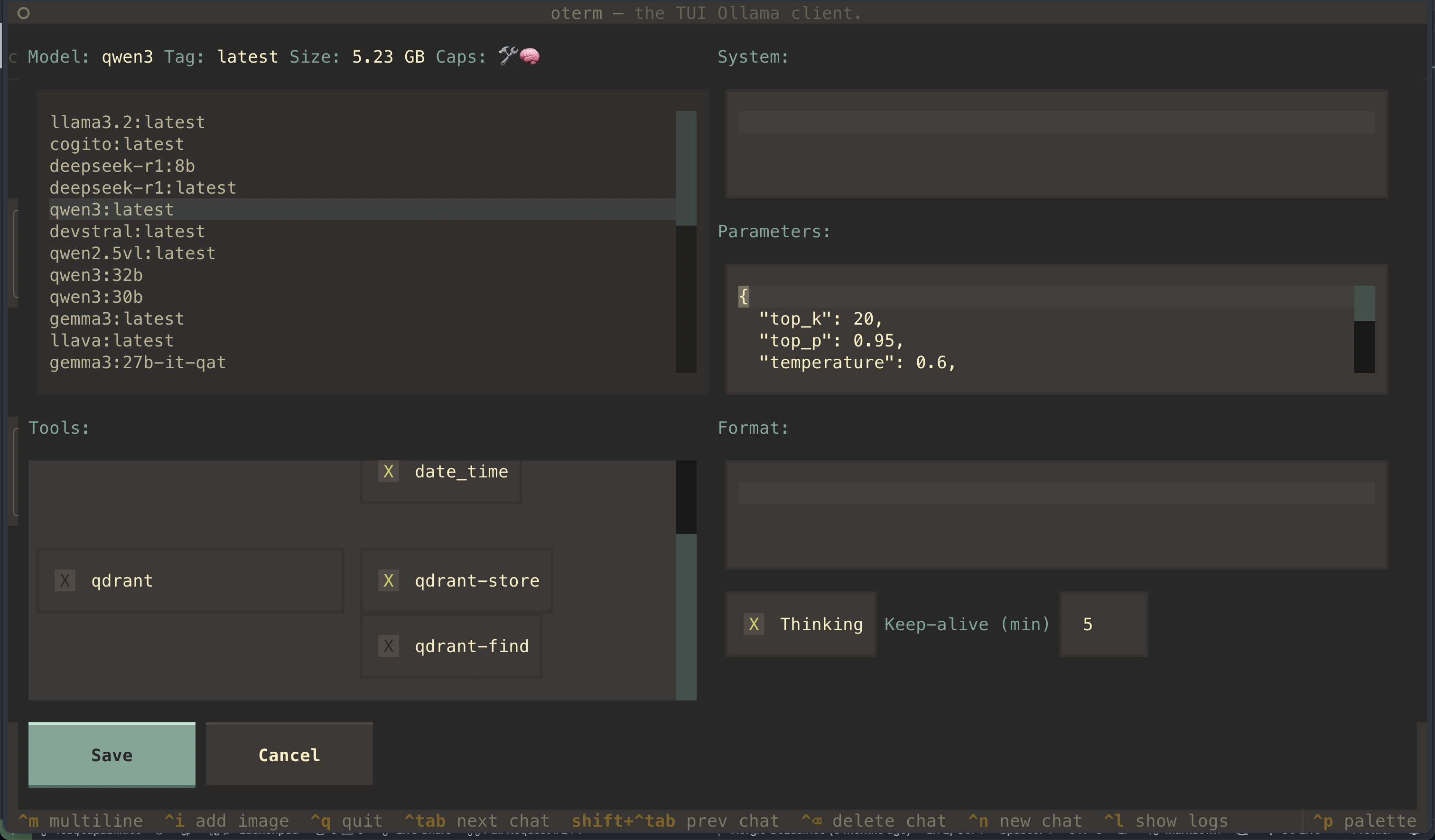

The model selection screen, allowing users to choose and customize available models.

oterm using the git MCP server to access its own repo.

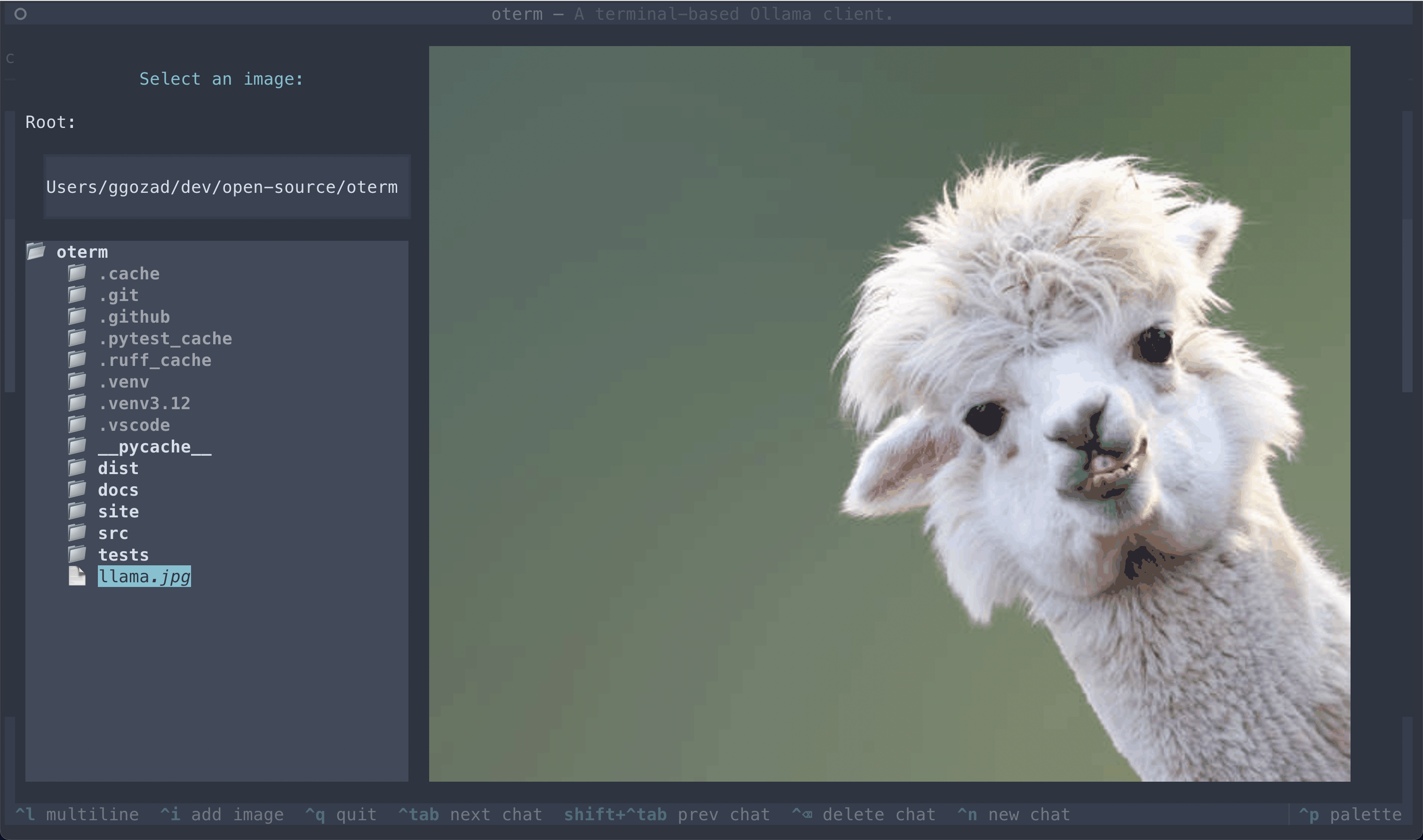

The image selection interface, demonstrating how users can include images in their conversations.

oterm supports multiple themes, allowing users to customize the appearance of the interface.

License

This project is licensed under the MIT License.

Related MCP Servers

Discover more MCP servers with similar functionality and use cases

Inbox Zero

by elie222

An AI‑powered email assistant that automates inbox management, enabling users to reach inbox zero fast by handling replies, labeling, archiving, unsubscribing, and providing analytics through a plain‑text prompt configuration.

Notion MCP Server

by makenotion

Provides a remote Model Context Protocol server for the Notion API, enabling OAuth‑based installation and optimized toolsets for AI agents with minimal token usage.

Atlassian

by sooperset

MCP Atlassian is a Model Context Protocol (MCP) server that integrates AI assistants with Atlassian products like Confluence and Jira. It enables AI to automate tasks, search for information, and manage content within Atlassian ecosystems.

Witsy

by nbonamy (client)

A desktop AI assistant that bridges dozens of LLM, image, video, speech, and search providers, offering chat, generative media, RAG, shortcuts, and extensible plugins directly from the OS.

Office PowerPoint MCP Server

by GongRzhe

Provides tools for creating, editing, and enhancing PowerPoint presentations through a comprehensive set of MCP operations powered by python-pptx.

Office Word MCP Server

by GongRzhe

Creates, reads, and manipulates Microsoft Word documents through a standardized interface for AI assistants, enabling rich editing, formatting, and analysis capabilities.

Gmail

by GongRzhe

Gmail-MCP-Server is a Model Context Protocol (MCP) server that integrates Gmail functionalities into AI assistants like Claude Desktop. It enables natural language interaction for email management, supporting features like sending, reading, and organizing emails.

Google Calendar

by nspady

google-calendar-mcp is a Model Context Protocol (MCP) server that integrates Google Calendar with AI assistants. It enables AI assistants to manage Google Calendar events, including creating, updating, deleting, and searching for events.

Tome

by runebookai (client)

Provides a desktop interface to chat with local or remote LLMs, schedule tasks, and integrate Model Context Protocol servers without coding.