Mcp Agent

by lastmile-ai

A lightweight, composable framework for building AI agents using Model Context Protocol and simple workflow patterns.

Mcp Agent Overview

What is Mcp Agent about?

Mcp Agent provides a simple, composable way to create AI agents that can call tools exposed by MCP servers. It abstracts away the complexity of managing MCP server lifecycles and implements all workflow patterns described in Anthropic's Building Effective Agents, plus OpenAI's Swarm pattern, all as interchangeable AugmentedLLM components.

How to use Mcp Agent?

- Install – pip install mcp-agent (or use uv add "mcp-agent").

- Configure – create an mcp_agent.config.yaml defining the execution engine, logger, MCP servers, and LLM defaults.

- Write code – instantiate an MCPApp, create one or more Agent objects with a name, instruction, and a list of MCP servers they can use. Attach an LLM (e.g., OpenAIAugmentedLLM) to the agent and call generate_str or generate for tool‑augmented responses.

- Run – use an async context manager (async with app.run()) to start the framework, then execute your agent logic.

import asyncio

from mcp_agent.app import MCPApp
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM

app = MCPApp(name="example")

async def main():
    async with app.run() as run:
        finder = Agent(name="finder", instruction="Read files and fetch URLs.", server_names=["fetch", "filesystem"])
        async with finder:
            llm = await finder.attach_llm(OpenAIAugmentedLLM)
            result = await llm.generate_str("Show me README.md verbatim")
            print(result)

asyncio.run(main())

Key Features of Mcp Agent

- Automatic MCP server management – start, stop, and reuse servers via gen_client or the built‑in connection manager.

- All workflow patterns – Parallel, Router, Intent‑Classifier, Evaluator‑Optimizer, Orchestrator‑Workers, Swarm, and the basic AugmentedLLM.

- Composable design – each workflow is itself an AugmentedLLM, allowing nesting and chaining.

- Model‑agnostic – works with OpenAI, Anthropic, or any provider implementing the LLM interface.

- Human‑in‑the‑loop support – tools can request console or UI input via human_input_callback.

- Extensible logging – configurable console or file transports with JSONL output.

- Easy installation – single‑command pip/uv install, plus example projects.

Use Cases of Mcp Agent

- Building multi‑agent assistants that collaborate on a task (e.g., document review, fact‑checking, style enforcement).

- Creating RAG chatbots that query vector databases via MCP servers.

- Developing CLI or web front‑ends (Streamlit, Marimo) for interactive AI agents.

- Orchestrating complex business workflows such as email automation, ticket routing, or content generation pipelines.

- Prototyping AI‑driven copilots that need access to external tools like file I/O, web fetching, or custom APIs.

FAQ about Mcp Agent

Q: Do I need an existing MCP client to use the library? A: No. Mcp Agent handles MCP client creation internally, so you can use it standalone or embed it in any MCP‑enabled host.

Q: Which LLM providers are supported?

A: Any provider that implements the AugmentedLLM interface; built‑in adapters exist for OpenAI and Anthropic.

Q: How are secrets managed?

A: Secrets can be stored in a git‑ignored mcp_agent.secrets.yaml or loaded from a .env file. For production, set MCP_APP_SETTINGS_PRELOAD to inject them at runtime.

Q: Can I run multiple agents concurrently? A: Yes. Agents are async context managers and can be started in parallel, especially when using the Parallel workflow.

Q: What if I need persistent server connections?

A: Use connect/disconnect or the MCPConnectionManager to keep servers alive across multiple tool calls.

Q: Is there support for streaming responses? A: Streaming listeners are planned in upcoming releases; current versions provide full‑response generation.

Mcp Agent's README

Overview

mcp-agent is a simple, composable framework to build agents using Model Context Protocol.

Inspiration: Anthropic announced 2 foundational updates for AI application developers:

- Model Context Protocol - a standardized interface to let any software be accessible to AI assistants via MCP servers.

- Building Effective Agents - a seminal writeup on simple, composable patterns for building production-ready AI agents.

mcp-agent puts these two foundational pieces into an AI application framework:

- It handles the pesky business of managing the lifecycle of MCP server connections so you don't have to.

- It implements every pattern described in Building Effective Agents, and does so in a composable way, allowing you to chain these patterns together.

- Bonus: It implements OpenAI's Swarm pattern for multi-agent orchestration, but in a model-agnostic way.

Altogether, this is the simplest and easiest way to build robust agent applications. Much like MCP, this project is in early development. We welcome all kinds of contributions, feedback and your help in growing this to become a new standard.

Get Started

We recommend using uv to manage your Python projects:

uv add "mcp-agent"

Alternatively:

pip install mcp-agent

Quickstart

[!TIP] The examples directory has several example applications to get started with. To run an example, clone this repo, then:

cd examples/basic/mcp_basic_agent # Or any other example
# Option A: secrets YAML
# cp mcp_agent.secrets.yaml.example mcp_agent.secrets.yaml  # then edit mcp_agent.secrets.yaml
# Option B: .env
cp .env.example .env  # then edit .env
uv run main.py

Here is a basic "finder" agent that uses the fetch and filesystem servers to look up a file, read a blog and write a tweet.

import asyncio
import os

from mcp_agent.app import MCPApp
from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM

app = MCPApp(name="hello_world_agent")

async def example_usage():
    async with app.run() as mcp_agent_app:
        logger = mcp_agent_app.logger
        # This agent can read the filesystem or fetch URLs
        finder_agent = Agent(
            name="finder",
            instruction="""You can read local files or fetch URLs.
                Return the requested information when asked.""",
            server_names=["fetch", "filesystem"],  # MCP servers this Agent can use
        )

        async with finder_agent:
            # Automatically initializes the MCP servers and adds their tools for LLM use
            tools = await finder_agent.list_tools()
            logger.info(f"Tools available:", data=tools)

            # Attach an OpenAI LLM to the agent (defaults to GPT-4o)
            llm = await finder_agent.attach_llm(OpenAIAugmentedLLM)

            # This will perform a file lookup and read using the filesystem server
            result = await llm.generate_str(
                message="Show me what's in README.md verbatim"
            )
            logger.info(f"README.md contents: {result}")

            # Uses the fetch server to fetch the content from URL
            result = await llm.generate_str(
                message="Print the first two paragraphs from https://www.anthropic.com/research/building-effective-agents"
            )
            logger.info(f"Blog intro: {result}")

            # Multi-turn interactions by default
            result = await llm.generate_str("Summarize that in a 128-char tweet")
            logger.info(f"Tweet: {result}")

if __name__ == "__main__":
    asyncio.run(example_usage())

The matching mcp_agent.config.yaml:

execution_engine: asyncio
logger:
  transports: [console] # You can use [file, console] for both
  level: debug
  path: "logs/mcp-agent.jsonl" # Used for file transport
  # For dynamic log filenames:
  # path_settings:
  #   path_pattern: "logs/mcp-agent-{unique_id}.jsonl"
  #   unique_id: "timestamp" # Or "session_id"
  #   timestamp_format: "%Y%m%d_%H%M%S"

mcp:
  servers:
    fetch:
      command: "uvx"
      args: ["mcp-server-fetch"]
    filesystem:
      command: "npx"
      args:
        [
          "-y",
          "@modelcontextprotocol/server-filesystem",
          "<add_your_directories>",
        ]

openai:
  # Secrets (API keys, etc.) are stored in an mcp_agent.secrets.yaml file which can be gitignored
  default_model: gpt-4o

Table of Contents

- Why use mcp-agent?

- Example Applications

- Core Concepts

- Workflow Patterns

- Advanced

- Contributing

- Roadmap

- FAQs

Why use mcp-agent?

There are too many AI frameworks out there already. But mcp-agent is the only one that is purpose-built for a shared protocol - MCP. It is also the most lightweight, and is closer to an agent pattern library than a framework.

As more services become MCP-aware, you can use mcp-agent to build robust and controllable AI agents that can leverage those services out-of-the-box.

Examples

Before we go into the core concepts of mcp-agent, let's show what you can build with it.

In short, you can build any kind of AI application with mcp-agent: multi-agent collaborative workflows, human-in-the-loop workflows, RAG pipelines and more.

Claude Desktop

You can integrate mcp-agent apps into MCP clients like Claude Desktop.

mcp-agent server

This app wraps an mcp-agent application inside an MCP server and exposes that server to Claude Desktop. The app exposes agents and workflows that Claude Desktop can invoke in service of the user's request.

https://github.com/user-attachments/assets/7807cffd-dba7-4f0c-9c70-9482fd7e0699

This demo shows a multi-agent evaluation task where each agent evaluates aspects of an input poem, and then an aggregator summarizes their findings into a final response.

Details: Starting from a user's request over text, the application:

- dynamically defines agents to do the job

- uses the appropriate workflow to orchestrate those agents (in this case the Parallel workflow)

Link to code: examples/basic/mcp_server_aggregator

[!NOTE] Huge thanks to Jerron Lim (@StreetLamb) for developing and contributing this example!

Streamlit

You can deploy mcp-agent apps using Streamlit.

Gmail agent

This app can perform read and write actions on Gmail using text prompts, e.g. reading, deleting, and sending emails, or marking them as read/unread. It uses an MCP server for Gmail.

https://github.com/user-attachments/assets/54899cac-de24-4102-bd7e-4b2022c956e3

Link to code: gmail-mcp-server

[!NOTE] Huge thanks to Jason Summer (@jasonsum) for developing and contributing this example!

Simple RAG Chatbot

This app uses a Qdrant vector database (via an MCP server) to do Q&A over a corpus of text.

https://github.com/user-attachments/assets/f4dcd227-cae9-4a59-aa9e-0eceeb4acaf4

Link to code: examples/usecases/streamlit_mcp_rag_agent

[!NOTE] Huge thanks to Jerron Lim (@StreetLamb) for developing and contributing this example!

Marimo

Marimo is a reactive Python notebook that replaces Jupyter and Streamlit. Here's the "file finder" agent from Quickstart implemented in Marimo:

Link to code: examples/usecases/marimo_mcp_basic_agent

[!NOTE] Huge thanks to Akshay Agrawal (@akshayka) for developing and contributing this example!

Python

You can write mcp-agent apps as Python scripts or Jupyter notebooks.

Swarm

This example demonstrates a multi-agent setup for handling different customer service requests in an airline context using the Swarm workflow pattern. The agents can triage requests, handle flight modifications, cancellations, and lost baggage cases.

https://github.com/user-attachments/assets/b314d75d-7945-4de6-965b-7f21eb14a8bd

Link to code: examples/workflows/workflow_swarm

Core Components

The following are the building blocks of the mcp-agent framework:

- MCPApp: global state and app configuration

- MCP server management: gen_client and MCPConnectionManager to easily connect to MCP servers.

- Agent: An Agent is an entity that has access to a set of MCP servers and exposes them to an LLM as tool calls. It has a name and purpose (instruction).

- AugmentedLLM: An LLM that is enhanced with tools provided from a collection of MCP servers. Every Workflow pattern described below is an AugmentedLLM itself, allowing you to compose and chain them together.

Everything in the framework is a derivative of these core capabilities.

Workflows

mcp-agent provides implementations for every pattern in Anthropic’s Building Effective Agents, as well as the OpenAI Swarm pattern.

Each pattern is model-agnostic, and exposed as an AugmentedLLM, making everything very composable.

AugmentedLLM

AugmentedLLM is an LLM that has access to MCP servers and functions via Agents.

LLM providers implement the AugmentedLLM interface to expose 3 functions:

- generate: Generate message(s) given a prompt, possibly over multiple iterations and making tool calls as needed.

- generate_str: Calls generate and returns the result as a string output.

- generate_structured: Uses Instructor to return the generated result as a Pydantic model.

Additionally, AugmentedLLM has memory, to keep track of long or short-term history.

from mcp_agent.agents.agent import Agent
from mcp_agent.workflows.llm.augmented_llm_anthropic import AnthropicAugmentedLLM

# Runs inside an async function, within `async with app.run() as mcp_agent_app:`
# and with `logger = mcp_agent_app.logger`, as in the Quickstart example.
finder_agent = Agent(
    name="finder",
    instruction="You are an agent with filesystem + fetch access. Return the requested file or URL contents.",
    server_names=["fetch", "filesystem"],
)

async with finder_agent:
    llm = await finder_agent.attach_llm(AnthropicAugmentedLLM)

    result = await llm.generate_str(
        message="Print the first 2 paragraphs of https://www.anthropic.com/research/building-effective-agents",
        # Can override model, tokens and other defaults
    )
    logger.info(f"Result: {result}")

    # Multi-turn conversation
    result = await llm.generate_str(
        message="Summarize those paragraphs in a 128 character tweet",
    )
    logger.info(f"Result: {result}")
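The multi-turn call above works because the AugmentedLLM keeps conversation history between generate_str calls. The stdlib-only sketch below illustrates that idea; SketchAugmentedLLM, its _call_model placeholder, and the role/content message format are assumptions for illustration, not mcp-agent's internal API.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class SketchAugmentedLLM:
    """Hypothetical stand-in for an AugmentedLLM that keeps conversation memory."""

    history: list[dict[str, str]] = field(default_factory=list)

    async def _call_model(self, messages: list[dict[str, str]]) -> str:
        # Placeholder for a real provider call; it reports how many user turns it has seen.
        user_turns = sum(1 for m in messages if m["role"] == "user")
        return f"reply to turn {user_turns}: {messages[-1]['content']}"

    async def generate_str(self, message: str) -> str:
        # Append the new user turn, send the whole history, then remember the reply.
        self.history.append({"role": "user", "content": message})
        reply = await self._call_model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


async def demo() -> None:
    llm = SketchAugmentedLLM()
    await llm.generate_str("Print the first 2 paragraphs of the blog post")
    second = await llm.generate_str("Summarize those paragraphs")
    print(second)  # reply to turn 2: Summarize those paragraphs
    print(len(llm.history))  # 4


if __name__ == "__main__":
    asyncio.run(demo())
```

Because the second call sees the first exchange, a follow-up like "Summarize those paragraphs" has something to refer to.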

Parallel

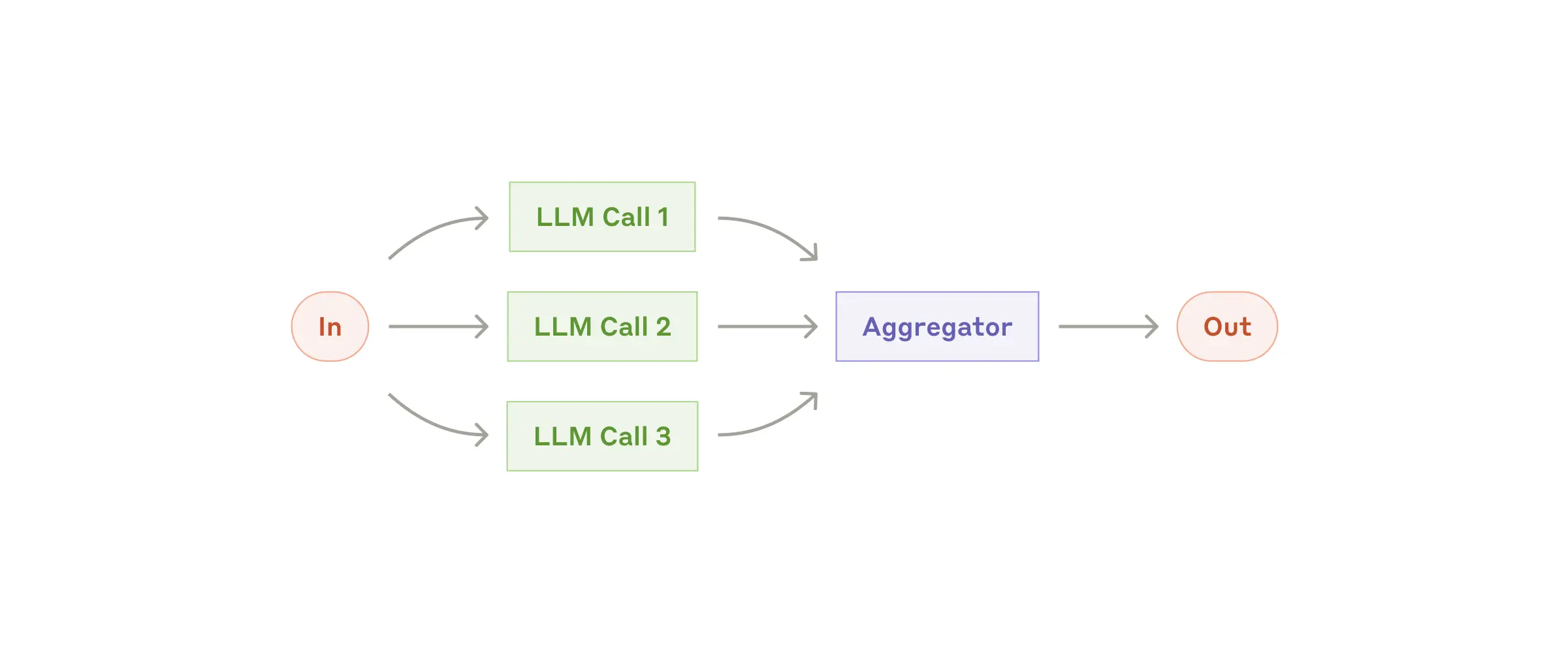

Fan-out tasks to multiple sub-agents and fan-in the results. Each subtask is an AugmentedLLM, as is the overall Parallel workflow, meaning each subtask can optionally be a more complex workflow itself.


proofreader = Agent(name="proofreader", instruction="Review grammar...")
fact_checker = Agent(name="fact_checker", instruction="Check factual consistency...")
style_enforcer = Agent(name="style_enforcer", instruction="Enforce style guidelines...")
grader = Agent(name="grader", instruction="Combine feedback into a structured report.")

parallel = ParallelLLM(
    fan_in_agent=grader,
    fan_out_agents=[proofreader, fact_checker, style_enforcer],
    llm_factory=OpenAIAugmentedLLM,
)

result = await parallel.generate_str("Student short story submission: ...", RequestParams(model="gpt-4o"))
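Conceptually, the fan-out/fan-in flow above runs every fan-out agent on the same input concurrently and hands all of their outputs to the fan-in agent. Here is a stdlib-only asyncio sketch of that shape; the reviewer coroutines and parallel_generate are hypothetical stubs standing in for LLM-backed agents, not ParallelLLM's actual implementation.

```python
import asyncio
from typing import Awaitable, Callable

# A hypothetical worker signature: takes the task text, returns its feedback.
Worker = Callable[[str], Awaitable[str]]


async def proofreader(text: str) -> str:
    return f"proofreader: checked {len(text.split())} words"


async def fact_checker(text: str) -> str:
    return "fact_checker: no claims to verify"


async def style_enforcer(text: str) -> str:
    return "style_enforcer: consistent tone"


async def grader(feedback: list[str]) -> str:
    # Fan-in step: combine all feedback into a single report.
    return "REPORT\n" + "\n".join(f"- {item}" for item in feedback)


async def parallel_generate(text: str, fan_out: list[Worker]) -> str:
    # Fan-out: run every worker concurrently; gather preserves the input order.
    feedback = await asyncio.gather(*(worker(text) for worker in fan_out))
    return await grader(list(feedback))


if __name__ == "__main__":
    report = asyncio.run(
        parallel_generate("Once upon a time", [proofreader, fact_checker, style_enforcer])
    )
    print(report)
```

Since each worker only needs the shared input, the total latency is roughly that of the slowest reviewer rather than the sum of all three.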

Router

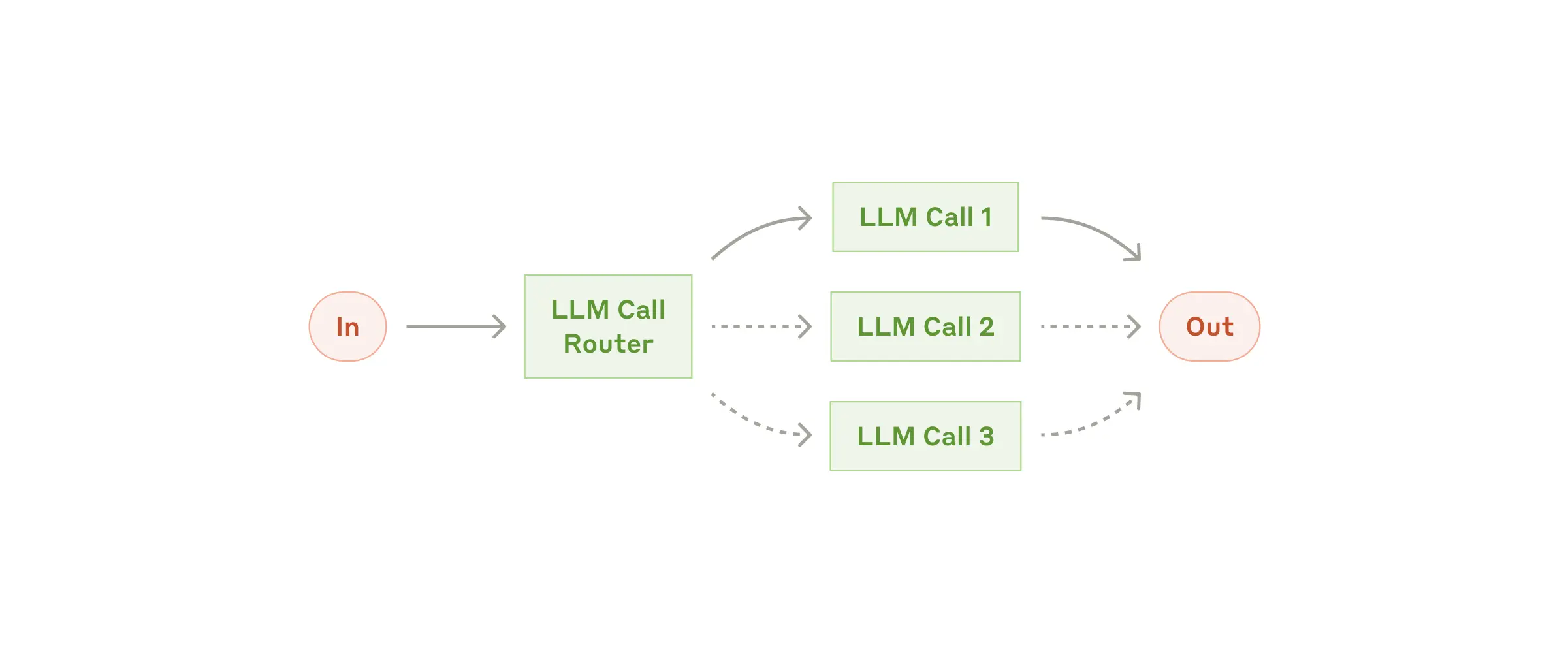

Given an input, route to the top_k most relevant categories. A category can be an Agent, an MCP server or a regular function.

mcp-agent provides several router implementations, including:

- EmbeddingRouter: uses embedding models for classification

- LLMRouter: uses LLMs for classification


def print_hello_world():
    print("Hello, world!")

finder_agent = Agent(name="finder", server_names=["fetch", "filesystem"])
writer_agent = Agent(name="writer", server_names=["filesystem"])

llm = OpenAIAugmentedLLM()
router = LLMRouter(
    llm=llm,
    agents=[finder_agent, writer_agent],
    functions=[print_hello_world],
)

results = await router.route(  # Also available: route_to_agent, route_to_server
    request="Find and print the contents of README.md verbatim",
    top_k=1,
)

chosen_agent = results[0].result
async with chosen_agent:
    ...
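To make the top_k idea concrete, the sketch below scores each candidate category against the request and returns the best matches, roughly the way an embedding-based router ranks categories by similarity. As a loud assumption, a bag-of-words cosine similarity stands in for a real embedding model, and the category names and descriptions are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    # Crude stand-in for an embedding: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def route(request: str, categories: dict[str, str], top_k: int = 1) -> list[tuple[str, float]]:
    """Return the top_k (category, score) pairs, best first."""
    query = bag_of_words(request)
    scored = [(name, cosine(query, bag_of_words(desc))) for name, desc in categories.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


# Hypothetical category descriptions (agents and a function, as in the example above).
CATEGORIES = {
    "finder": "find read fetch files urls contents",
    "writer": "write save files to disk",
    "print_hello_world": "print hello world greeting",
}

if __name__ == "__main__":
    best = route("Find and print the contents of README.md verbatim", CATEGORIES, top_k=1)
    print(best[0][0])  # finder
```

An LLM-based router replaces the scoring function with a model call, but the contract (rank categories, keep the top_k) stays the same.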

IntentClassifier

A close sibling of Router, the Intent Classifier pattern identifies the top_k Intents that most closely match a given input.

Just like a Router, mcp-agent provides both an embedding and LLM-based intent classifier.

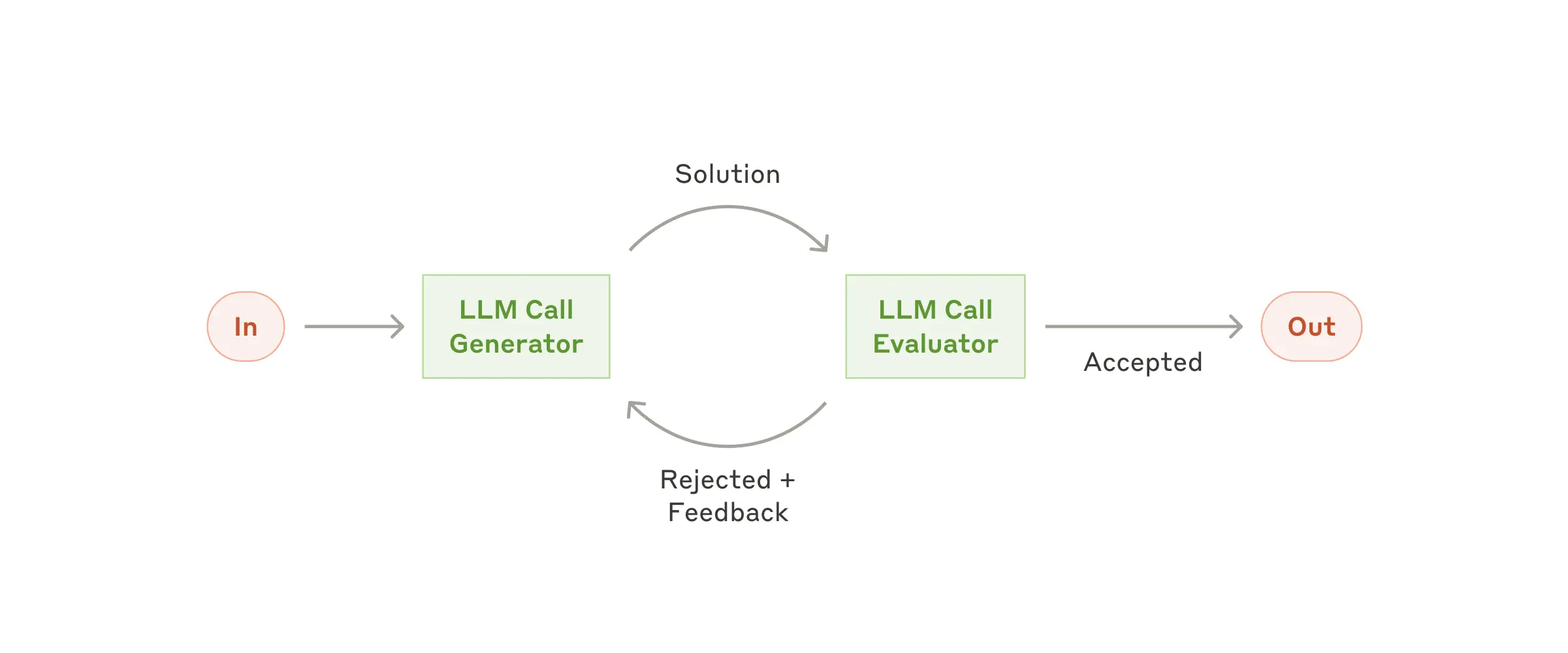

Evaluator-Optimizer

One LLM (the “optimizer”) refines a response while another (the “evaluator”) critiques it, repeating until the response meets a quality criterion.


optimizer = Agent(name="cover_letter_writer", server_names=["fetch"], instruction="Generate a cover letter ...")
evaluator = Agent(name="critiquer", instruction="Evaluate clarity, specificity, relevance...")

eo_llm = EvaluatorOptimizerLLM(
    optimizer=optimizer,
    evaluator=evaluator,
    llm_factory=OpenAIAugmentedLLM,
    min_rating=QualityRating.EXCELLENT,  # Keep iterating until the minimum quality bar is reached
)

result = await eo_llm.generate_str("Write a job cover letter for an AI framework developer role at LastMile AI.")
print("Final refined cover letter:", result)
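The control flow behind the evaluator-optimizer pattern is a bounded loop: draft, critique, and redraft with the critique as feedback until the rating reaches the bar. In the sketch below, the Rating enum is hypothetical (only EXCELLENT appears in the snippet above), the optimizer and evaluator are stubs in place of LLM calls, and the max_refinements cap is an assumption added so the loop always terminates.

```python
from enum import IntEnum
from typing import Callable, Optional


class Rating(IntEnum):
    # Hypothetical ordered scale; a higher value means a better draft.
    POOR = 0
    FAIR = 1
    GOOD = 2
    EXCELLENT = 3


Optimizer = Callable[[str, Optional[str], Optional[str]], str]  # (task, previous draft, feedback) -> draft
Evaluator = Callable[[str], tuple[Rating, str]]  # draft -> (rating, feedback)


def evaluator_optimizer(
    task: str,
    optimize: Optimizer,
    evaluate: Evaluator,
    min_rating: Rating = Rating.EXCELLENT,
    max_refinements: int = 5,
) -> tuple[str, Rating, int]:
    """Return (best draft, its rating, number of drafts generated)."""
    if max_refinements < 1:
        raise ValueError("max_refinements must be at least 1")
    draft: Optional[str] = None
    feedback: Optional[str] = None
    best_draft, best_rating = "", Rating.POOR
    for attempt in range(1, max_refinements + 1):
        draft = optimize(task, draft, feedback)
        rating, feedback = evaluate(draft)
        if attempt == 1 or rating > best_rating:
            best_draft, best_rating = draft, rating  # keep the highest-rated draft so far
        if rating >= min_rating:
            return best_draft, best_rating, attempt  # quality bar reached
    return best_draft, best_rating, max_refinements  # gave up after the cap


def toy_optimizer(task: str, previous: Optional[str], feedback: Optional[str]) -> str:
    # Stub: the first draft is the task itself; each revision appends the feedback.
    return task if previous is None else f"{previous} +{feedback}"


def toy_evaluator(draft: str) -> tuple[Rating, str]:
    # Stub: one rating level per revision, capped at EXCELLENT.
    return Rating(min(draft.count("+"), Rating.EXCELLENT)), "more specifics"


if __name__ == "__main__":
    draft, rating, attempts = evaluator_optimizer("Write a cover letter", toy_optimizer, toy_evaluator)
    print(rating.name, attempts)  # EXCELLENT 4
```

Returning the best draft seen, rather than the last one, guards against a late revision that the evaluator rates lower.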

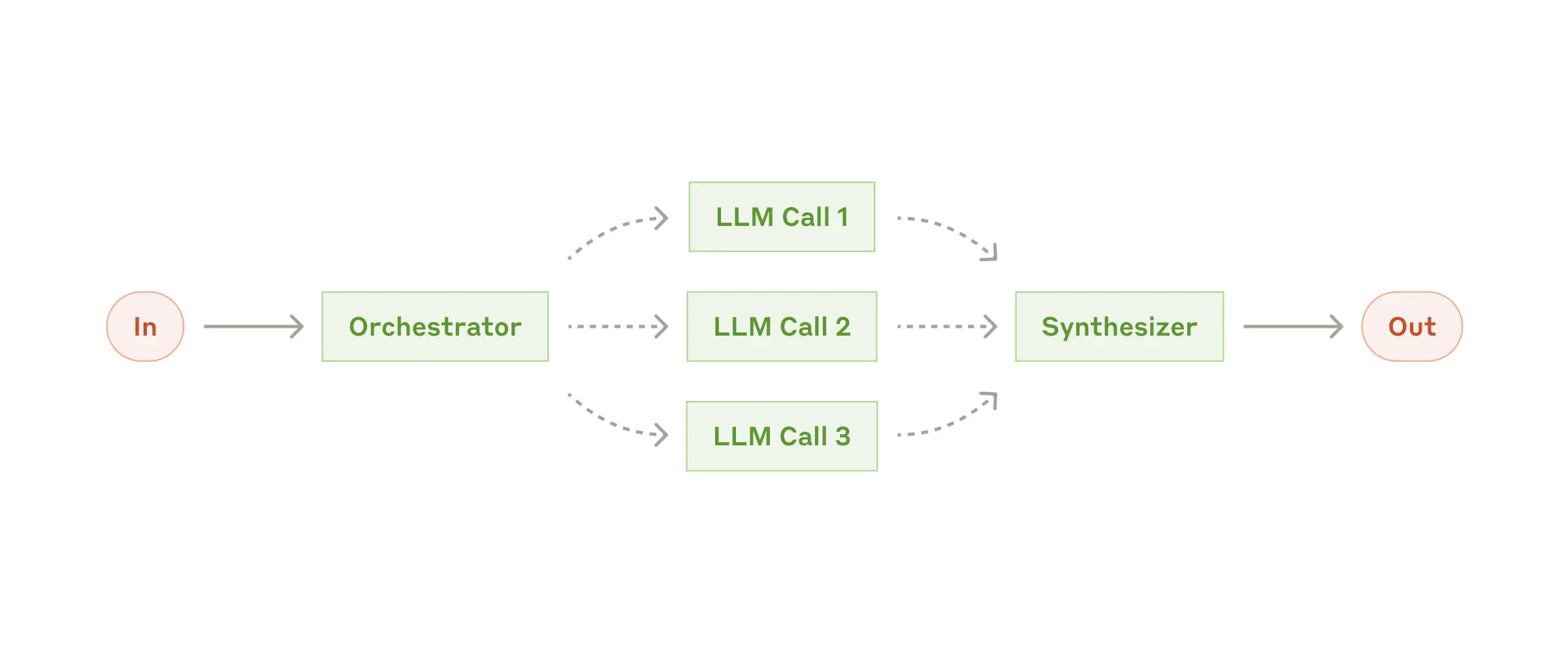

Orchestrator-workers

A higher-level LLM generates a plan, assigns its steps to sub-agents, and synthesizes the results. The Orchestrator workflow automatically parallelizes steps that can run in parallel, and blocks on dependencies.


finder_agent = Agent(name="finder", server_names=["fetch", "filesystem"])
writer_agent = Agent(name="writer", server_names=["filesystem"])
proofreader = Agent(name="proofreader", ...)
fact_checker = Agent(name="fact_checker", ...)
style_enforcer = Agent(name="style_enforcer", instruction="Use APA style guide from ...", server_names=["fetch"])

orchestrator = Orchestrator(
    llm_factory=AnthropicAugmentedLLM,
    available_agents=[finder_agent, writer_agent, proofreader, fact_checker, style_enforcer],
)

task = "Load short_story.md, evaluate it, produce a graded_report.md with multiple feedback aspects."
result = await orchestrator.generate_str(task, RequestParams(model="gpt-4o"))
print(result)
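"Parallelizes steps and blocks on dependencies" can be pictured as executing a plan in waves: every step whose dependencies have finished runs concurrently, then the next wave starts. This sketch assumes a hypothetical plan format (step name mapped to its dependency list) and a stub worker; it is not the Orchestrator's real planner or data model.

```python
import asyncio
from typing import Awaitable, Callable

Plan = dict[str, list[str]]  # hypothetical format: step name -> names of the steps it depends on


async def run_plan(
    plan: Plan, work: Callable[[str], Awaitable[str]]
) -> tuple[list[list[str]], dict[str, str]]:
    """Execute the plan in waves; return (steps run in each wave, result of every step)."""
    results: dict[str, str] = {}
    waves: list[list[str]] = []
    while len(results) < len(plan):
        # A step is ready once all of its dependencies have produced results.
        ready = sorted(
            step for step, deps in plan.items()
            if step not in results and all(dep in results for dep in deps)
        )
        if not ready:
            raise ValueError("plan has a cycle or depends on an unknown step")
        # Independent steps in the same wave run concurrently.
        outputs = await asyncio.gather(*(work(step) for step in ready))
        results.update(zip(ready, outputs))
        waves.append(ready)
    return waves, results


async def stub_worker(step: str) -> str:
    await asyncio.sleep(0)  # stand-in for a sub-agent doing real work
    return f"{step}: ok"


PLAN: Plan = {
    "load_story": [],
    "proofread": ["load_story"],
    "fact_check": ["load_story"],
    "style_check": ["load_story"],
    "write_report": ["proofread", "fact_check", "style_check"],
}

if __name__ == "__main__":
    waves, _ = asyncio.run(run_plan(PLAN, stub_worker))
    print(waves)  # [['load_story'], ['fact_check', 'proofread', 'style_check'], ['write_report']]
```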

Swarm

OpenAI has an experimental multi-agent pattern called Swarm, for which mcp-agent provides a model-agnostic reference implementation.

The mcp-agent Swarm pattern works seamlessly with MCP servers, and is exposed as an AugmentedLLM, allowing for composability with other patterns above.


triage_agent = SwarmAgent(...)
flight_mod_agent = SwarmAgent(...)
lost_baggage_agent = SwarmAgent(...)

# The triage agent decides whether to route to flight_mod_agent or lost_baggage_agent
swarm = AnthropicSwarm(agent=triage_agent, context_variables={...})

test_input = "My bag was not delivered!"
result = await swarm.generate_str(test_input)
print("Result:", result)
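In Swarm, the active agent either answers or hands the conversation off to another agent, for example triage transferring to lost baggage, while shared context variables carry over. A minimal sketch of that handoff loop follows; the Handoff type, the agent functions, and the max_handoffs guard are hypothetical, and real SwarmAgents make this decision through LLM tool calls rather than keyword checks.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Handoff:
    target: str  # name of the agent that should take over


AgentFn = Callable[[str, dict], Union[str, Handoff]]


def triage(message: str, context: dict) -> Union[str, Handoff]:
    # Keyword checks stand in for the LLM choosing a transfer tool.
    if "bag" in message.lower():
        return Handoff("lost_baggage")
    if "flight" in message.lower():
        return Handoff("flight_mod")
    return "How can I help with your trip?"


def lost_baggage(message: str, context: dict) -> Union[str, Handoff]:
    context["case"] = "baggage_search_started"
    return "I've started a search for your bag."


def flight_mod(message: str, context: dict) -> Union[str, Handoff]:
    return "Let's look at changing your flight."


AGENTS: dict[str, AgentFn] = {"triage": triage, "lost_baggage": lost_baggage, "flight_mod": flight_mod}


def run_swarm(message: str, start: str = "triage", max_handoffs: int = 5) -> tuple[str, str, dict]:
    """Return (final reply, name of the agent that produced it, shared context)."""
    context: dict = {}
    current = start
    for _ in range(max_handoffs + 1):
        result = AGENTS[current](message, context)
        if isinstance(result, Handoff):
            current = result.target  # transfer control; the context dict carries over
            continue
        return result, current, context
    raise RuntimeError("too many handoffs")


if __name__ == "__main__":
    reply, agent, context = run_swarm("My bag was not delivered!")
    print(agent, "->", reply)  # lost_baggage -> I've started a search for your bag.
```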

Advanced

Composability

An example of composability is using an Evaluator-Optimizer workflow as the planner LLM inside the Orchestrator workflow. Generating a high-quality plan to execute is important for robust behavior, and an evaluator-optimizer can help ensure that.

Doing so is seamless in mcp-agent, because each workflow is implemented as an AugmentedLLM.

optimizer = Agent(name="plan_optimizer", server_names=[...], instruction="Generate a plan given an objective ...")
evaluator = Agent(name="plan_evaluator", instruction="Evaluate logic, ordering and precision of plan ...")

planner_llm = EvaluatorOptimizerLLM(
    optimizer=optimizer,
    evaluator=evaluator,
    llm_factory=OpenAIAugmentedLLM,
    min_rating=QualityRating.EXCELLENT,
)

orchestrator = Orchestrator(
    llm_factory=AnthropicAugmentedLLM,
    available_agents=[finder_agent, writer_agent, proofreader, fact_checker, style_enforcer],
    planner=planner_llm  # It's that simple
)

...
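The reason planner=planner_llm works is that every workflow exposes the same generate interface, so a slot that expects an LLM accepts a whole workflow instead. The stdlib sketch below illustrates that idea with a hypothetical TextGenerator protocol and toy classes; none of these names are mcp-agent classes.

```python
import asyncio
from typing import Protocol


class TextGenerator(Protocol):
    """Hypothetical shared interface: anything that can turn a message into text."""

    async def generate_str(self, message: str) -> str: ...


class StubLLM:
    """A leaf 'LLM' that just wraps its input in its own name."""

    def __init__(self, name: str) -> None:
        self.name = name

    async def generate_str(self, message: str) -> str:
        return f"{self.name}({message})"


class RefineTwice:
    """A toy workflow that is itself a TextGenerator: it drafts, then refines the draft."""

    def __init__(self, inner: TextGenerator) -> None:
        self.inner = inner

    async def generate_str(self, message: str) -> str:
        draft = await self.inner.generate_str(message)
        return await self.inner.generate_str(draft)


class ToyOrchestrator:
    """Accepts any TextGenerator as its planner, whether a plain LLM or a whole workflow."""

    def __init__(self, planner: TextGenerator) -> None:
        self.planner = planner

    async def generate_str(self, message: str) -> str:
        plan = await self.planner.generate_str(f"plan: {message}")
        return f"executed {plan}"


if __name__ == "__main__":
    nested = ToyOrchestrator(planner=RefineTwice(StubLLM("optimizer")))
    print(asyncio.run(nested.generate_str("grade the story")))  # executed optimizer(optimizer(plan: grade the story))
```

Swapping StubLLM for RefineTwice required no change to ToyOrchestrator, which is the property the composability claim relies on.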

Signaling and Human Input

Signaling: The framework can pause/resume tasks. The agent or LLM might “signal” that it needs user input, so the workflow awaits. A developer may signal during a workflow to seek approval or review before continuing with a workflow.

Human Input: If an Agent has a human_input_callback, the LLM can call a __human_input__ tool to request user input mid-workflow.

The Swarm example shows this in action.

from mcp_agent.human_input.handler import console_input_callback

lost_baggage = SwarmAgent(
    name="Lost baggage traversal",
    instruction=lambda context_variables: f"""
        {
            FLY_AIR_AGENT_PROMPT.format(
                customer_context=context_variables.get("customer_context", "None"),
                flight_context=context_variables.get("flight_context", "None"),
            )
        }\n Lost baggage policy: policies/lost_baggage_policy.md""",
    functions=[
        escalate_to_agent,
        initiate_baggage_search,
        transfer_to_triage,
        case_resolved,
    ],
    server_names=["fetch", "filesystem"],
    human_input_callback=console_input_callback,  # Request input from the console
)
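Mechanically, human input can be modeled as one more tool: when the model requests __human_input__, the agent's human_input_callback is invoked and its answer goes back to the model. This sketch assumes a simplified tool-call dictionary and an injectable callback, so a console prompt can be swapped for a test double; dispatch_tool_call and the request shape are hypothetical, not mcp-agent's internals.

```python
from typing import Callable, Optional

HumanInputCallback = Callable[[str], str]


def dispatch_tool_call(
    call: dict,
    tools: dict[str, Callable[..., str]],
    human_input_callback: Optional[HumanInputCallback] = None,
) -> str:
    """Route a model's tool call; '__human_input__' goes to the callback."""
    name, args = call["name"], call.get("arguments", {})
    if name == "__human_input__":
        if human_input_callback is None:
            raise RuntimeError("agent has no human_input_callback configured")
        return human_input_callback(args["prompt"])
    return tools[name](**args)


def console_callback(prompt: str) -> str:
    # Interactive variant: blocks until the user types a reply.
    return input(f"{prompt}\n> ")


if __name__ == "__main__":
    request = {"name": "__human_input__", "arguments": {"prompt": "What is your bag tag number?"}}
    print(dispatch_tool_call(request, {}, human_input_callback=lambda prompt: "TAG123"))  # TAG123
```

Treating the human as a tool keeps the agent loop unchanged: the workflow simply waits on that tool call like any other.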

App Config

Create an mcp_agent.config.yaml and define secrets via either a gitignored mcp_agent.secrets.yaml or a local .env. In production, prefer MCP_APP_SETTINGS_PRELOAD to avoid writing plaintext secrets to disk.
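Keeping secrets in a separate file only works if both sources end up in one settings tree. One common way to combine them is a recursive merge, in which nested sections are merged key by key and secret values fill in or override config values. The deep_merge helper below is a hypothetical stdlib sketch operating on already-parsed dictionaries; it is not mcp-agent's actual loader, whose precedence rules may differ.

```python
from typing import Any


def deep_merge(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Return a new dict where `override` wins, merging nested dicts key by key."""
    merged = dict(base)  # shallow copy so `base` is not mutated
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)  # merge nested sections
        else:
            merged[key] = value  # scalars and lists are replaced outright
    return merged


# Hypothetical, already-parsed contents of the config and secrets files.
config = {"openai": {"default_model": "gpt-4o"}, "execution_engine": "asyncio"}
secrets = {"openai": {"api_key": "sk-placeholder"}}

if __name__ == "__main__":
    print(deep_merge(config, secrets))
```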

MCP server management

mcp-agent makes it trivial to connect to MCP servers. Create an mcp_agent.config.yaml to define server configuration under the mcp section:

mcp:
  servers:
    fetch:
      command: "uvx"
      args: ["mcp-server-fetch"]
      description: "Fetch content at URLs from the world wide web"

gen_client

Manage the lifecycle of an MCP server within an async context manager:

from mcp_agent.mcp.gen_client import gen_client

async with gen_client("fetch") as fetch_client:
    # Fetch server is initialized and ready to use
    result = await fetch_client.list_tools()

# Fetch server is automatically disconnected/shutdown

The gen_client function makes it easy to spin up connections to MCP servers.

Persistent server connections

In many cases, you want an MCP server to stay online for persistent use (e.g. in a multi-step tool use workflow). For persistent connections, use:

connect and disconnect

from mcp_agent.mcp.gen_client import connect, disconnect

fetch_client = None
try:
    fetch_client = await connect("fetch")  # connect is a coroutine, so it must be awaited
    result = await fetch_client.list_tools()
finally:
    await disconnect("fetch")

MCPConnectionManager

For even more fine-grained control over server connections, you can use the MCPConnectionManager.

from mcp_agent.context import get_current_context
from mcp_agent.mcp.mcp_connection_manager import MCPConnectionManager

context = get_current_context()
connection_manager = MCPConnectionManager(context.server_registry)

async with connection_manager:
    fetch_client = await connection_manager.get_server("fetch")  # Initializes fetch server
    result = await fetch_client.list_tools()
    fetch_client2 = await connection_manager.get_server("fetch")  # Reuses same server connection

# All servers managed by connection manager are automatically disconnected/shut down
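The reuse-and-cleanup behavior described in the comments above boils down to a per-name cache of live connections owned by an async context manager. The sketch below models it with a hypothetical FakeConnection class; it does not start real MCP servers and is not MCPConnectionManager's implementation.

```python
import asyncio


class FakeConnection:
    """Hypothetical stand-in for a live server connection."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.open = True

    async def close(self) -> None:
        self.open = False


class SketchConnectionManager:
    """Hypothetical manager: one connection per server name, all closed on exit."""

    def __init__(self) -> None:
        self._connections: dict[str, FakeConnection] = {}
        self._lock = asyncio.Lock()

    async def get_server(self, name: str) -> FakeConnection:
        async with self._lock:  # avoid starting the same server twice under concurrency
            if name not in self._connections:
                self._connections[name] = FakeConnection(name)  # "start" the server once
            return self._connections[name]

    async def __aenter__(self) -> "SketchConnectionManager":
        return self

    async def __aexit__(self, *exc_info: object) -> None:
        for conn in self._connections.values():
            await conn.close()
        self._connections.clear()


async def demo() -> tuple[bool, bool]:
    async with SketchConnectionManager() as manager:
        first = await manager.get_server("fetch")
        second = await manager.get_server("fetch")
        reused = first is second
    return reused, first.open


if __name__ == "__main__":
    print(asyncio.run(demo()))  # (True, False)
```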

MCP Server Aggregator

MCPAggregator acts as a "server-of-servers".

It provides a single MCP server interface for interacting with multiple MCP servers.

This allows you to expose tools from multiple servers to LLM applications.

from mcp_agent.mcp.mcp_aggregator import MCPAggregator

aggregator = await MCPAggregator.create(server_names=["fetch", "filesystem"])

async with aggregator:
    # combined list of tools exposed by 'fetch' and 'filesystem' servers
    tools = await aggregator.list_tools()

    # namespacing -- invokes the 'fetch' server to call the 'fetch' tool
    fetch_result = await aggregator.call_tool(name="fetch-fetch", arguments={"url": "https://www.anthropic.com/research/building-effective-agents"})

    # no namespacing -- first server in the aggregator exposing that tool wins
    read_file_result = await aggregator.call_tool(name="read_file", arguments={})
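The two call styles above imply a simple lookup rule: a "server-tool" name targets that server explicitly, while a bare tool name resolves to the first server, in configured order, that exposes it. Here is a sketch of that resolution logic over a hypothetical in-memory tool listing; the tool lists are made up, and how mcp-agent handles edge cases such as hyphens in server names is not covered.

```python
def resolve_tool(name: str, servers: dict[str, list[str]]) -> tuple[str, str]:
    """Map a possibly namespaced tool name to (server, tool).

    `servers` maps server name -> tool names, in the aggregator's configured order.
    """
    # Namespaced form: "<server>-<tool>", e.g. "fetch-fetch".
    for server, tools in servers.items():
        prefix = f"{server}-"
        if name.startswith(prefix) and name[len(prefix):] in tools:
            return server, name[len(prefix):]
    # Bare form: the first server exposing the tool wins.
    for server, tools in servers.items():
        if name in tools:
            return server, name
    raise KeyError(f"no server exposes tool {name!r}")


# Hypothetical tool listing for the two servers used above.
SERVERS = {
    "fetch": ["fetch"],
    "filesystem": ["read_file", "write_file", "list_directory"],
}

if __name__ == "__main__":
    print(resolve_tool("fetch-fetch", SERVERS))  # ('fetch', 'fetch')
    print(resolve_tool("read_file", SERVERS))  # ('filesystem', 'read_file')
```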

Contributing

We welcome any and all kinds of contributions. Please see the CONTRIBUTING guidelines to get started.

Special Mentions

There have already been incredible community contributors who are driving this project forward:

- Shaun Smith (@evalstate) -- who has been leading the charge on countless complex improvements, both to mcp-agent and generally to the MCP ecosystem.

- Jerron Lim (@StreetLamb) -- who has contributed countless hours, excellent examples, and great ideas to the project.

- Jason Summer (@jasonsum) -- for identifying several issues and adapting his Gmail MCP server to work with mcp-agent

Roadmap

We will be adding a detailed roadmap (ideally driven by your feedback). The current set of priorities include:

- Durable Execution -- allow workflows to pause/resume and serialize state so they can be replayed or be paused indefinitely. We are working on integrating Temporal for this purpose.

- Memory -- adding support for long-term memory

- Streaming -- Support streaming listeners for iterative progress

- Additional MCP capabilities -- Expand beyond tool calls to support:
  - Resources
  - Prompts
  - Notifications

FAQs

What are the core benefits of using mcp-agent?

mcp-agent provides a streamlined approach to building AI agents using capabilities exposed by MCP (Model Context Protocol) servers.

MCP is quite low-level, and this framework handles the mechanics of connecting to servers, working with LLMs, handling external signals (like human input) and supporting persistent state via durable execution. That lets you, the developer, focus on the core business logic of your AI application.

Core benefits:

- 🤝 Interoperability: ensures that any tool exposed by any number of MCP servers can seamlessly plug in to your agents.

- ⛓️ Composability & Customizability: Implements well-defined workflows, but in a composable way that enables compound workflows, and allows full customization across model provider, logging, orchestrator, etc.

- 💻 Programmatic control flow: Keeps things simple as developers just write code instead of thinking in graphs, nodes and edges. For branching logic, you write if statements. For cycles, use while loops.

- 🖐️ Human Input & Signals: Supports pausing workflows for external signals, such as human input, which are exposed as tool calls an Agent can make.

Do you need an MCP client to use mcp-agent?

No, you can use mcp-agent anywhere, since it handles MCPClient creation for you. This allows you to leverage MCP servers outside of MCP hosts like Claude Desktop.

Here are all the ways you can set up your mcp-agent application:

MCP-Agent Server

You can expose mcp-agent applications as MCP servers themselves (see example), allowing MCP clients to interface with sophisticated AI workflows using the standard tools API of MCP servers. This is effectively a server-of-servers.

MCP Client or Host

You can embed mcp-agent in an MCP client directly to manage the orchestration across multiple MCP servers.

Standalone

You can use mcp-agent applications in a standalone fashion (i.e. they aren't part of an MCP client). The examples are all standalone applications.

Tell me a fun fact

I debated naming this project silsila (سلسلہ), which means chain of events in Urdu. mcp-agent is more matter-of-fact, but there's still an easter egg in the project paying homage to silsila.
